Striking a Balance: The Power of AI in Social Media Content Moderation in 2023

Introduction

Social media platforms have become integral to our daily lives, providing a space for connection, communication, and expression. However, this digital realm also brings challenges, particularly when it comes to ensuring a safe and respectful online environment. The sheer volume of content posted on these platforms makes manual moderation a daunting task. Enter Artificial Intelligence (AI), which is transforming how social media content is moderated. In this post, we explore AI-driven content moderation: its benefits, its challenges, and its role in fostering a positive online community.

The Challenge of Content Moderation

Social media platforms are hubs of diverse content, ranging from personal updates to news articles, memes, and videos. While these platforms enable free expression, they also host content that violates community guidelines, promotes hate speech, or contains explicit material. Manual content moderation, while essential, can be overwhelming, time-consuming, and subject to human biases.

AI-Powered Content Moderation: How It Works

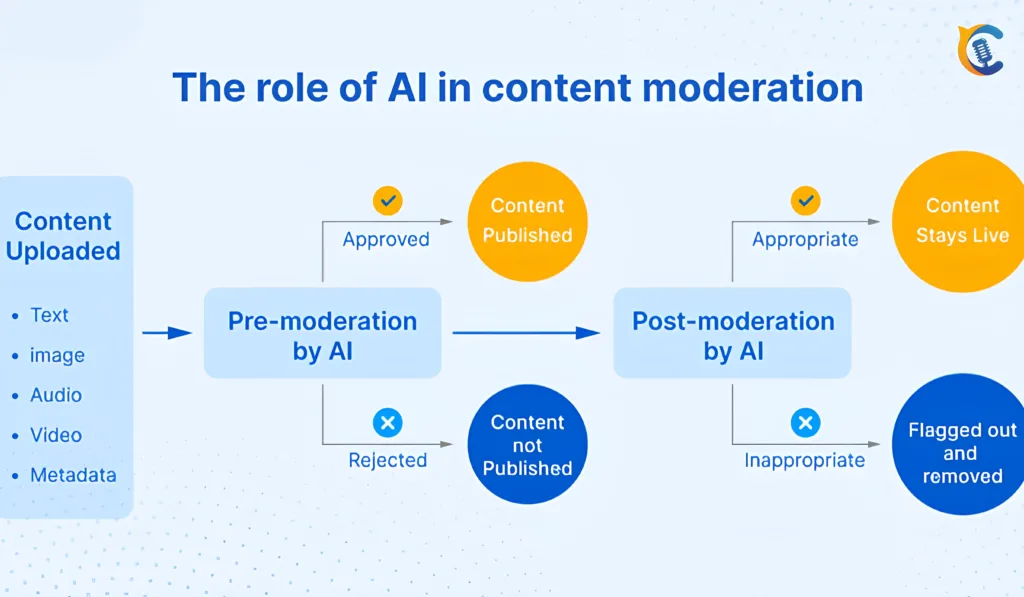

AI-driven content moderation involves training algorithms to identify and flag inappropriate content based on predefined criteria. This technology relies on machine learning, natural language processing, and computer vision to analyze text, images, videos, and audio for patterns that indicate potential violations.
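To make the idea of "training an algorithm to flag content" more concrete, here is a minimal sketch of a text classifier. It is a toy Naive Bayes model written from scratch, which is only one of many possible techniques and not necessarily what any platform uses. The training examples, the labels `violation` and `ok`, and the class name `NaiveBayesModerator` are all made up for illustration.

```python
import math
import re
from collections import Counter

# Tiny, made-up training set: (text, label). Real systems train on far more data.
TRAINING_DATA = [
    ("you are an idiot and everyone hates you", "violation"),
    ("i will hurt you if you post again", "violation"),
    ("get lost you worthless idiot", "violation"),
    ("what a lovely photo of your dog", "ok"),
    ("thanks for sharing this great article", "ok"),
    ("i hope you have a lovely day", "ok"),
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesModerator:
    """Hypothetical toy model: multinomial Naive Bayes with add-one smoothing."""

    def __init__(self, examples):
        self.word_counts = {}          # label -> Counter of word frequencies
        self.label_counts = Counter()  # label -> number of training examples
        for text, label in examples:
            self.label_counts[label] += 1
            self.word_counts.setdefault(label, Counter()).update(tokenize(text))
        self.vocabulary = {w for c in self.word_counts.values() for w in c}

    def log_score(self, text: str, label: str) -> float:
        """Log prior plus the sum of smoothed log likelihoods of known words."""
        total_examples = sum(self.label_counts.values())
        score = math.log(self.label_counts[label] / total_examples)
        counts = self.word_counts[label]
        denominator = sum(counts.values()) + len(self.vocabulary)
        for word in tokenize(text):
            if word in self.vocabulary:  # ignore words never seen in training
                score += math.log((counts[word] + 1) / denominator)
        return score

    def classify(self, text: str) -> str:
        """Return the label with the highest score for this text."""
        return max(self.label_counts, key=lambda label: self.log_score(text, label))


moderator = NaiveBayesModerator(TRAINING_DATA)
print(moderator.classify("you idiot"))               # flagged as "violation"
print(moderator.classify("lovely article, thanks"))  # classified as "ok"
```

A model this small is easy to fool and would not be usable in production, but it shows the basic loop: learn word patterns from labeled examples, then score new posts against them.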

Benefits of AI in Social Media Content Moderation

- Scale and Speed: AI can process vast amounts of content in real time, significantly enhancing moderation efficiency and response times.

- Consistency: AI applies guidelines uniformly, minimizing discrepancies that may arise with human moderators.

- 24/7 Monitoring: AI can provide around-the-clock content monitoring, ensuring that potentially harmful material is identified and addressed promptly.

- Language Diversity: AI can analyze content in multiple languages, facilitating a global reach in moderation efforts.

- Reducing Human Exposure: AI filters out explicit or disturbing content, minimizing the emotional toll on human moderators.

Challenges and Considerations

While AI offers significant advantages, challenges persist:

- False Positives and Negatives: AI algorithms may over-identify or miss certain types of content, leading to false positives (innocent content flagged) or false negatives (inappropriate content missed).

- Context and Nuance: AI struggles to grasp context and cultural nuances, often resulting in misinterpretation of content intent.

- Adversarial Attacks: Malicious users may attempt to deceive AI systems by subtly altering content to evade detection.

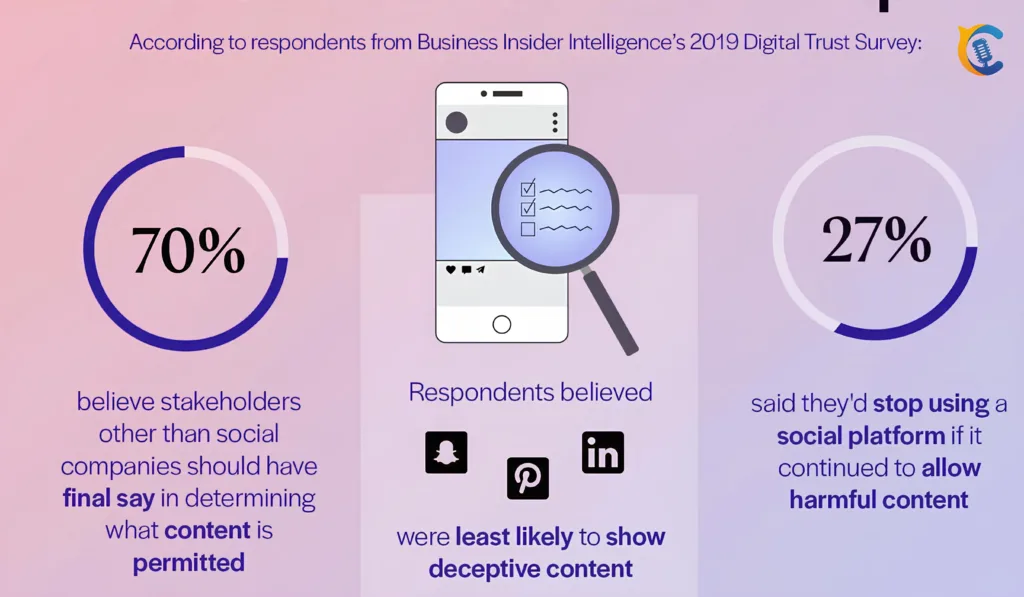

- Ethical Concerns: Deciding what content is appropriate involves subjective judgments, raising ethical questions about AI’s role in determining online speech.
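False positives and false negatives can be measured by comparing what a model flagged against what human reviewers decided. The sketch below counts both kinds of error and derives precision and recall from them. The function name `moderation_metrics` and the sample data are hypothetical, chosen only to illustrate the arithmetic.

```python
def moderation_metrics(predicted, actual):
    """Compare model flags with human labels (True means 'violates guidelines')."""
    pairs = list(zip(predicted, actual))
    tp = sum(p and a for p, a in pairs)          # harmful and flagged
    fp = sum(p and not a for p, a in pairs)      # innocent but flagged
    fn = sum(not p and a for p, a in pairs)      # harmful but missed
    tn = sum(not p and not a for p, a in pairs)  # innocent and left alone
    # Precision: what share of flagged posts were really violations.
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: what share of real violations were caught.
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {
        "false_positives": fp,
        "false_negatives": fn,
        "true_positives": tp,
        "true_negatives": tn,
        "precision": precision,
        "recall": recall,
    }


# Made-up sample: eight posts, model flags vs. human reviewer labels.
model_flags = [True, True, False, False, True, False, False, True]
human_labels = [True, False, False, True, True, False, False, True]

metrics = moderation_metrics(model_flags, human_labels)
print(metrics)
```

In this sample the model wrongly flags one innocent post and misses one harmful post, so both precision and recall come out at 0.75. Tracking the two numbers separately matters because lowering one kind of error often raises the other.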

The Future of AI-Driven Content Moderation

As technology advances, the future of AI in social media content moderation holds exciting possibilities:

- Contextual Understanding: AI models are evolving to better understand context and distinguish between harmful and innocuous content.

- Adaptive Learning: AI systems will continuously learn and adapt from new data, improving accuracy over time.

- Human-AI Collaboration: A combined effort of AI algorithms and human moderators will likely strike the ideal balance, allowing AI to flag potential issues while humans assess context.
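One common way to picture human-AI collaboration is confidence-based routing: the model acts on its own only when it is very sure, and sends uncertain cases to a person. The sketch below assumes the model outputs a violation score between 0 and 1. The threshold values and the names `route` and `Decision` are illustrative assumptions, not figures from any real platform.

```python
from dataclasses import dataclass

# Assumed thresholds for illustration; real values would be tuned per platform.
REMOVE_THRESHOLD = 0.95  # at or above this, the model acts automatically
REVIEW_THRESHOLD = 0.60  # at or above this, a human moderator takes a look


@dataclass
class Decision:
    post_id: str
    action: str   # "remove", "human_review", or "allow"
    score: float


def route(post_id: str, violation_score: float) -> Decision:
    """Pick an action from the model's estimated probability of a violation."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= REMOVE_THRESHOLD:
        action = "remove"
    elif violation_score >= REVIEW_THRESHOLD:
        action = "human_review"
    else:
        action = "allow"
    return Decision(post_id, action, violation_score)


# Hypothetical scores for three posts.
for post_id, score in [("post-1", 0.99), ("post-2", 0.72), ("post-3", 0.10)]:
    print(route(post_id, score))
```

The middle band is where context and nuance matter most, so that is where human judgment is spent. Widening the band sends more work to moderators; narrowing it leans more heavily on the model.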

Implementing AI for Social Media Content Moderation: Best Practices

As the adoption of AI for social media content moderation continues to grow, it’s important to establish best practices to ensure effective implementation and address potential challenges. Here are some key strategies for leveraging AI in content moderation:

- Hybrid Moderation Approach: While AI can significantly enhance content moderation, it’s essential to combine its capabilities with human expertise. Human moderators bring contextual understanding, cultural awareness, and nuanced decision-making that AI might struggle with. Implement a hybrid approach that combines the strengths of both AI and human moderators for optimal results.

- Continuous Training and Improvement: AI algorithms need continuous training and refinement to adapt to evolving online trends, emerging language, and new forms of harmful content. Regularly update and retrain your AI models to ensure accuracy and effectiveness.

- Contextual Analysis: Enhance AI’s understanding of context by integrating sentiment analysis and contextual cues. This can help reduce false positives by identifying content that might seem harmful at first glance but is harmless in context.

- User Empowerment: Give users the ability to report and provide feedback on content moderation decisions. This not only improves the AI’s performance over time but also promotes a sense of community involvement in maintaining a safe online environment.

- Ethical Guidelines and Transparency: Establish clear ethical guidelines for AI content moderation, outlining the criteria for content removal, the use of warning labels, and the appeals process. Ensure transparency in AI decision-making by providing explanations for flagged content.

- Adaptive Filters: Implement adaptive filters that allow users to customize their content moderation preferences. This empowers users to tailor their online experience while maintaining a certain level of safety.

- Collaboration and Knowledge Sharing: Foster collaboration among social media platforms, researchers, and AI developers to share insights, best practices, and advancements in content moderation technology. A collective effort can drive innovation and more effective moderation strategies.

- Diverse Data and Inclusive Training: Train AI models on diverse and representative datasets to mitigate bias and ensure fair moderation. Include a wide range of perspectives and cultural contexts to avoid favoring one group over another.
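The adaptive filters idea from the list above can be sketched as per-user tolerance levels that sit underneath a platform-wide limit. The example assumes a model that returns a score between 0 and 1 for each content category. The category names, the limit values, and the function `is_visible` are hypothetical and exist only to show the shape of the logic.

```python
# Assumed platform-wide ceilings: users cannot loosen a filter beyond these.
PLATFORM_LIMITS = {"violence": 0.90, "profanity": 1.00, "spam": 0.80}

# Assumed defaults applied when a user has not set a preference.
DEFAULT_PREFERENCES = {"violence": 0.50, "profanity": 0.70, "spam": 0.50}


def is_visible(scores, user_preferences=None):
    """Return True if every category score is within the user's tolerance.

    scores: category -> model score in [0, 1], higher means more likely harmful.
    user_preferences: category -> highest score the user is willing to see.
    """
    preferences = {**DEFAULT_PREFERENCES, **(user_preferences or {})}
    for category, score in scores.items():
        # The effective limit is the stricter of the user's and the platform's.
        limit = min(preferences.get(category, 1.0),
                    PLATFORM_LIMITS.get(category, 1.0))
        if score > limit:
            return False
    return True


sweary_post = {"violence": 0.10, "profanity": 0.85, "spam": 0.05}
violent_post = {"violence": 0.95, "profanity": 0.10, "spam": 0.05}

print(is_visible(sweary_post))                       # hidden under defaults
print(is_visible(sweary_post, {"profanity": 1.0}))   # shown to a tolerant user
print(is_visible(violent_post, {"violence": 1.0}))   # still hidden by the platform limit
```

Taking the stricter of the two limits is what keeps "a certain level of safety" in place: users can tailor their experience, but only within the bounds the platform sets.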

Looking Ahead: Future Trends in AI-Powered Content Moderation

The field of AI for social media content moderation is continually evolving, with several exciting trends on the horizon:

- Multilingual Moderation: AI will become more adept at understanding and moderating content in various languages, addressing the global nature of social media platforms.

- Visual Content Analysis: AI will advance in analyzing visual content, including images and videos, to identify harmful or inappropriate material.

- Real-Time Sentiment Analysis: AI will incorporate real-time sentiment analysis to better understand user intentions and emotions, leading to more nuanced content moderation decisions.

- Customizable Moderation: Users will have increased control over the moderation settings, allowing them to define their own tolerance levels for different types of content.

- Contextual Awareness: AI models will improve their contextual understanding, reducing false positives and negatives by considering the broader context of posts.

AI in Social Media Moderation: Key Facts

- AI improves efficiency: AI algorithms swiftly scan and filter social media content, enhancing moderation speed.

- Content categorization: AI labels posts by category, aiding in the identification of harmful or inappropriate content.

- Reduced human workload: AI lessens the burden on human moderators, leading to better mental well-being.

- Enhanced accuracy: AI can recognize subtle patterns, which helps reduce false positives and negatives in content assessment.

- Multilingual support: AI’s language capabilities allow moderation across diverse languages, ensuring global coverage.

- Real-time monitoring: AI provides instantaneous content review, enabling quicker response to emerging issues.

- Contextual understanding: AI is getting better at interpreting context, such as telling harmless banter from offensive speech, though nuance remains a challenge.

- Evolving adaptability: AI learns from new data, adapting to evolving trends in online conversations.

- Addressing scale: AI manages vast amounts of content, ensuring thorough scrutiny of platforms with high user activity.

- Ethical considerations: AI moderation raises concerns about biased decisions and potential censorship, necessitating careful oversight.

Conclusion

AI-powered content moderation is a game-changer for maintaining a safe and positive online environment on social media platforms. While challenges remain, the benefits of scalability, speed, and consistency are reshaping the landscape of content moderation. By implementing best practices, addressing ethical considerations, and staying attuned to emerging trends, social media platforms can harness the full potential of AI while safeguarding user experiences and promoting responsible online interactions. As technology advances, the collaboration between AI and human moderators will continue to play a crucial role in shaping the future of content moderation.
